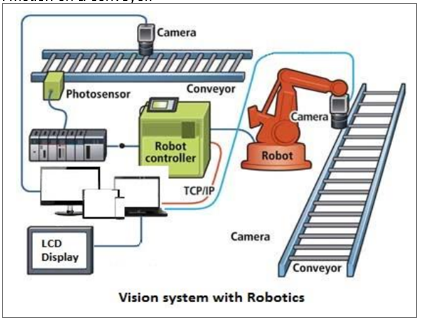

We developed a vision system for a robot. Give a robot eyes and it can find objects in its working envelope, reducing the need for complex and expensive fixtures. This makes robotic automation more flexible: the robot adapts to variation in part size, shape and location, which ultimately reduces cell complexity. With vision systems, cartons can be palletized and de-palletized, components can be assembled, and parts can be lifted off racks and out of bins. It’s even possible to track and pick parts in motion on a conveyor.

Cameras:

- Types of Cameras: Robots can use various types of cameras, including RGB cameras for color information, depth cameras for 3D perception, and infrared cameras for low-light or night vision.

- Placement: Cameras can be mounted on different parts of the robot, such as the head, body, or arms, depending on the application.

Image Processing:

- Image Capture: The vision system captures images or video frames from the cameras.

- Image Pre-processing: Raw images often require pre-processing to enhance features, correct distortions, or filter noise.

- Feature Extraction: Algorithms identify relevant features or patterns in the images.
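The pre-processing and feature-extraction steps above can be sketched in a few lines. This is a minimal illustration, not the pipeline of any particular vision system: it assumes a grayscale frame stored as a list of rows, uses a 3x3 mean filter as the noise-filtering step, and uses Sobel gradient magnitude as the extracted feature. The function names and the synthetic frame are hypothetical, and a production system would normally rely on an optimized imaging library instead.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def box_blur(img: Image) -> Image:
    """Pre-processing: 3x3 mean filter; border pixels average the neighbors that exist."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [
                img[j][i]
                for j in range(max(0, y - 1), min(h, y + 2))
                for i in range(max(0, x - 1), min(w, x + 2))
            ]
            out[y][x] = sum(vals) / len(vals)
    return out


def sobel_magnitude(img: Image) -> Image:
    """Feature extraction: Sobel gradient magnitude (border pixels are left at 0)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y][x - 1] - img[y + 1][x - 1])
            gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y - 1][x] - img[y - 1][x + 1])
            out[y][x] = (gx * gx + gy * gy) ** 0.5
    return out


# Synthetic stand-in for a captured frame: dark left half, bright right half.
frame = [[0.0] * 4 + [100.0] * 4 for _ in range(6)]
edges = sobel_magnitude(box_blur(frame))

# Column with the strongest edge response in the middle row.
strongest_col = max(range(8), key=lambda x: edges[3][x])
```

The edge response peaks at the boundary between the dark and bright halves and is zero in the flat regions, which is the kind of feature a later recognition step would consume.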

Computer Vision Algorithms:

- Object Recognition: Detect and identify objects in the environment.

- Object Tracking: Follow the movement of objects over time.

- Depth Sensing: Determine the distance to objects in the robot’s surroundings.

- Semantic Segmentation: Classify different regions of an image into categories.

- Pose Estimation: Determine the position and orientation of objects.
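As one concrete instance of the algorithms listed above, the sketch below estimates a planar pose (position and in-plane orientation) from a binary object mask using image moments. It assumes the object has already been segmented from the background, and it covers only the 2D case; a full 3D pose would additionally need camera calibration and depth or model information. The function name `blob_pose` and the example mask are hypothetical.

```python
import math
from typing import List, Tuple


def blob_pose(mask: List[List[int]]) -> Tuple[float, float, float]:
    """Return (cx, cy, angle_rad) for the nonzero pixels of a binary mask.

    The centroid comes from first-order moments. The angle is the major-axis
    orientation from second-order central moments, in image coordinates
    (x to the right, y downward).
    """
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pts:
        raise ValueError("mask contains no object pixels")
    n = len(pts)
    cx = sum(x for x, _ in pts) / n
    cy = sum(y for _, y in pts) / n
    mu20 = sum((x - cx) ** 2 for x, _ in pts) / n
    mu02 = sum((y - cy) ** 2 for _, y in pts) / n
    mu11 = sum((x - cx) * (y - cy) for x, y in pts) / n
    angle = 0.5 * math.atan2(2 * mu11, mu20 - mu02)
    return cx, cy, angle


# A horizontal bar: centroid at its middle pixel, orientation along the x axis.
bar = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
cx, cy, angle = blob_pose(bar)
```

In a pick-and-place cell, a centroid and angle like these would still have to be mapped from pixel coordinates into the robot's coordinate frame before a gripper could use them.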

Machine Learning:

- Training Models: Use machine learning techniques to train models for specific tasks like object recognition or facial expression analysis.

- Neural Networks: Deep learning approaches, including convolutional neural networks (CNNs), are often used for complex vision tasks.

Integration with Robot Control:

- Sensor Fusion: Combine data from vision systems with other sensors, such as lidar or radar, for a more comprehensive understanding of the environment.

- Feedback Loop: Vision information can be used to adjust and optimize the robot’s actions in real-time.
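Sensor fusion can take many forms. One of the simplest is inverse-variance weighting, sketched below for two distance estimates of the same object. This is an illustrative assumption rather than the method used by any specific robot: it treats the sensor errors as independent and unbiased, and the readings and variances are made-up numbers.

```python
from typing import Iterable, Tuple


def fuse(estimates: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Returns (fused_value, fused_variance). Lower-variance sensors get more weight.
    """
    weighted = []
    for value, variance in estimates:
        if variance <= 0:
            raise ValueError("variance must be positive")
        weighted.append((1.0 / variance, value))
    if not weighted:
        raise ValueError("at least one estimate is required")
    total_weight = sum(w for w, _ in weighted)
    fused_value = sum(w * v for w, v in weighted) / total_weight
    return fused_value, 1.0 / total_weight


# Hypothetical readings: distance in metres with variance in square metres.
camera = (2.10, 0.04)  # vision-based depth estimate, noisier
lidar = (2.00, 0.01)   # lidar range, more precise
distance, variance = fuse([camera, lidar])
```

The fused distance lands closer to the lidar reading because the lidar has the smaller variance, and the fused variance is smaller than either input, which is the practical payoff of combining sensors.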

Applications:

- Autonomous Navigation: Robots use vision to navigate through environments, avoiding obstacles and reaching destinations.

- Pick and Place: Vision systems enable robots to identify and manipulate objects with precision.

- Quality Control: Vision systems inspect products for defects during manufacturing.

- Human-Robot Interaction: Recognizing and responding to human gestures, expressions, and commands.

Challenges:

- Ambient Conditions: Changes in lighting conditions, shadows, and reflections can affect vision performance.

- Real-time Processing: Some applications must process each frame within a fixed time budget, for example before the next frame arrives or before a moving part leaves the robot’s reach.

- Robustness: The system must stay reliable as parts, backgrounds, and other conditions in the environment vary.
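The real-time constraint above comes down to simple arithmetic: at a given frame rate, each frame has a fixed processing budget. The sketch below works that out and flags frames that overrun it. The helper names and the latency figures are hypothetical; in practice the latencies would be measured on the target hardware.

```python
from typing import List, Sequence


def frame_budget_ms(fps: float) -> float:
    """Time available to process one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def missed_frames(latencies_ms: Sequence[float], fps: float) -> List[int]:
    """Indices of frames whose processing time exceeded the per-frame budget."""
    budget = frame_budget_ms(fps)
    return [i for i, t in enumerate(latencies_ms) if t > budget]


# Hypothetical per-frame processing times for a 30 fps camera (budget of about 33.3 ms).
latencies = [12.0, 31.5, 40.2, 33.0]
late = missed_frames(latencies, fps=30.0)
```

Here only the third frame overruns its budget. A system that cannot tolerate such misses would need a faster algorithm, a lower resolution, or a lower frame rate.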

Implementing a vision system in robotics often involves a multidisciplinary approach, combining expertise in computer vision, machine learning, and robotics. Advances in technology continue to enhance the capabilities of vision systems, enabling robots to perform more complex and versatile tasks.